Can deepfake technology be used for good?

AI can believably replace anyone’s face in any video with, say, that of the President of the United States. Scary? The result is called a “deepfake”, and AI is getting better at making them.

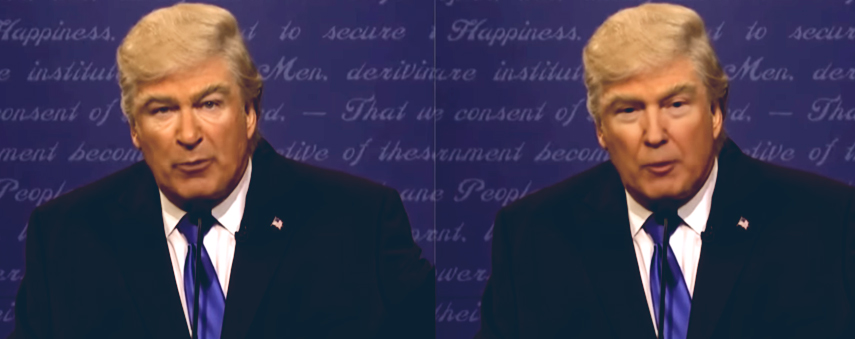

A scenario involving Donald Trump isn’t a random example. In February 2018, a YouTube account called Derpfakes used an AI image swap tool to transpose the Leader of the Free World onto a comedic impression of him by Alec Baldwin on Saturday Night Live. The result was convincing at first glance.

Since then, the algorithms have improved. A deepfake takes one person’s face and realistically overlays it onto another’s in a video. So far, it has mainly been used by amateur filmmakers, who use Generative Adversarial Networks (GANs). Two Machine Learning (ML) models are pitted against each other: one trains on a data set to create a video forgery, whilst the other tries to detect the forgery. The contest pushes the forger towards a result that the deepfake-maker won’t have to iron so many creases out of. As the models train on more data, the results improve. ML algorithms, after all, learn from their previous mistakes.
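The forger-versus-detector contest described above can be sketched in a few lines of Python. This is a toy under loud assumptions, not how any real deepfake tool is built: the "video" is a single number, the "genuine footage" is random numbers centred on 4, and both models are one-line formulas. The names `train_toy_gan` and `sigmoid`, and every constant, are made up for illustration.

```python
import math
import random


def sigmoid(s):
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def train_toy_gan(steps=60, batch=64, lr=0.05, seed=0):
    """Train a 1-D toy GAN; returns generator (a, b) and discriminator (w, c)."""
    rng = random.Random(seed)
    a, b = 1.0, 0.0   # forger (generator): fake = a * noise + b
    w, c = 0.1, 0.0   # detector (discriminator): P(real) = sigmoid(w * x + c)

    for _ in range(steps):
        real = [rng.gauss(4.0, 0.5) for _ in range(batch)]  # assumed "genuine" data
        noise = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        fake = [a * z + b for z in noise]

        # Detector step: ascend log D(real) + log(1 - D(fake)).
        gw = gc = 0.0
        for xr, xf in zip(real, fake):
            dr, df = sigmoid(w * xr + c), sigmoid(w * xf + c)
            gw += (1.0 - dr) * xr - df * xf
            gc += (1.0 - dr) - df
        w += lr * gw / batch
        c += lr * gc / batch

        # Forger step: ascend log D(fake), i.e. try to fool the updated detector.
        ga = gb = 0.0
        for z in noise:
            df = sigmoid(w * (a * z + b) + c)
            ga += (1.0 - df) * w * z
            gb += (1.0 - df) * w
        a += lr * ga / batch
        b += lr * gb / batch

    return (a, b), (w, c)


if __name__ == "__main__":
    (a, b), (w, c) = train_toy_gan()
    print(f"forger:   fake = {a:.2f} * noise + {b:.2f}")
    print(f"detector: P(real) = sigmoid({w:.2f} * x + {c:.2f})")
```

In this sketch the forger’s offset `b` starts at 0 and is nudged towards the genuine data only because the detector keeps pointing out the difference. Toy GANs like this one tend to oscillate rather than settle neatly, which hints at why the real thing takes so much data and patience.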

The word “deepfake” is a portmanteau of “deep learning” and “fake”, much like “bitmoji” or “freeware”. The software is easy to find: a desktop app called FakeApp, launched in 2018, allowed users to create their own deepfake videos, and there are a number of open-source alternatives. None of it, however, is as easy to use as something like Photoshop or After Effects.

These neural networks are not specifically designed to recognise faces. Lighting conditions and camera angles can complicate the process, so similar-looking faces are often used; with a high-end GPU, the neural network can learn more complex mappings on its own. You can even train several separate deepfake models so that each neural network only has to focus on a specific part of the video.

It’s perhaps no surprise that such powerful software has already fallen into the wrong hands. Inserting your favourite celebrity into a pornographic video is now easier than ever. Pornhub and Reddit have vowed to shut down deepfake videos on their sites, and Scarlett Johansson has called the Internet itself a “vast wormhole of darkness” after her likeness was repeatedly used in fake adult videos.

Deepfake is at a stage where it is condemned by all. There are even calls in the UK to make it a specific crime.

How could deepfake develop?

Deepfake fascinates people. It’s fast becoming a genre on YouTube: millions of people have watched deepfake videos of characters in scenes of movies they were never in. Edward Norton’s face has replaced Brad Pitt’s in a famous Fight Club scene in one video, whilst Heath Ledger’s Joker from The Dark Knight has cropped up in a deepfake edit of A Knight’s Tale, a movie that predates Ledger’s iconic Batman villain by seven years.

Deepfake is a work in progress but it’s growing in realism. AI is enabling amateur filmmakers to create almost believable video effects: what could the possibilities look like for film studios?

The technology behind deepfake could be game-changing for entertainment. ML could be used to create more realistic effects not only in cinema but in gaming too. Studios could bring in actors based on their target market: imagine casting famous foreign actors for other countries’ versions of a movie.

Netflix experimented with a choose-your-own-adventure episode of Black Mirror, Bandersnatch, in late 2018. What if you, the viewer, could choose which actors you’d like in the lead roles? ML algorithms can also be used to generate storylines based on what you want to watch. In the future, studios could offer customisable video stories tailored to you. You could even insert your family and friends into the plot.

Personalised advertising could be an opportunity for businesses too. Choosing a face for a fashion range is a difficult decision, but with deepfake it need not be. It would be possible to license a selection of celebrities and show each demographic the icons it likes.

Humans are visual animals. Faces and expressions communicate to us in a way nothing else does, and the surface possibilities of deepfake are only the tip of the iceberg. Could deepfake be used in criminal reconstructions? Could ML technology be used to reconstruct historical scenes with more accuracy than ever before? Deepfake opens a Pandora’s box of video possibilities, but with that could come incredible technological advancements.

Deepfake poses more ethical questions than it answers

There are no easy resolutions to AI dilemmas. The possibility of interactive cinema has so far mainly produced porn. The ability to tell stories with more accuracy has been lost in the fun of re-animating Donald Trump to say things he never said. We may well be in the era of “fake news”: the era of “fake views” beckons.

We’ve been here before with images. We still have debates about the moral integrity of Photoshop. Is it right to fix the imperfections of a model? Is it dangerous for amateur digital artists to have the ability to mock up real-looking scenes of powerful people? These arguments rage on over 25 years after Photoshop’s inception and, like deepfake, the software is improving, with Adobe itself using AI to create a better workflow than ever before.

Whilst there are plenty of drawbacks to photo and video manipulation, there is near-agreement on one thing. Deepfake, like Photoshop before it, is challenging our perception of the world around us. It is making us more critical of what we see. Studying videos for signs of authenticity means that we don’t take content for granted. A lack of trust in the media we used to rely on makes the world a scarier place, but after an adjustment period it may make us wiser.

Newspapers taught us not to believe everything we read. Photoshop taught us not to believe everything we saw. With the majority of Hollywood films already employing unbelievable CGI, how soon before the human race starts scrutinising video content a little more?

It’s a debate that will continue a little longer. Deepfake is undeniably scary and dangerous. Perhaps, though, it’s a good thing to question authenticity a little more in the modern world.