Google translates hand gestures to speech with sign language AI

Google has announced a new machine learning model for tracking hands and recognising gestures, giving once soundless sign language a voice

There are thousands of spoken languages the world over. Each has its own subtleties and nuances. Developing AI to interpret and translate those can be difficult, and even the most sophisticated translation tools struggle to do so correctly. Sentences can become jumbled, meanings are misread, and colloquialisms are mostly lost on machines.

However, there has been a breakthrough in perceiving and translating the language of the human hand.

Google’s development of “Real-Time Hand Tracking”, which perceives hand movements and gestures, allows for direct on-device translation to speech.

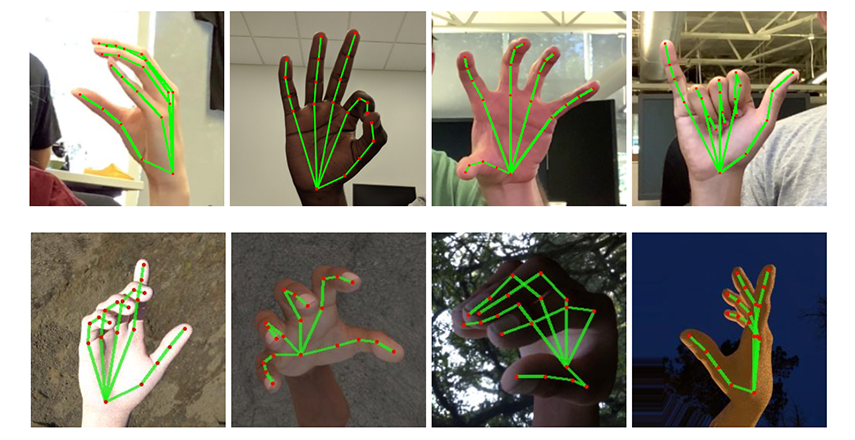

The tech giant has annotated around 30,000 real images of hands, performing a variety of gestures and shapes, with 21 3D keypoints, or coordinates.

Google trained its machine learning model with a mixed training schema that combines data from rendered images and real-world images. The model also reports whether a hand is in the frame at all, giving a “hand present classification.”
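To give a sense of what 21 3D keypoints make possible, here is a minimal Python sketch of one thing a developer might do with them: work out which fingers are extended. It is an illustration only, not Google's published method. The keypoint numbering (wrist at index 0, fingertips at 8, 12, 16 and 20) and the function name `extended_fingers` are assumptions made for this example, and the thumb is left out for simplicity.

```python
import math
from typing import List, Tuple

Point3D = Tuple[float, float, float]

NUM_KEYPOINTS = 21
WRIST = 0  # assumed index of the wrist keypoint

# Assumed (fingertip, middle-joint) index pairs; hypothetical layout for this sketch.
FINGER_JOINTS = {
    "index": (8, 6),
    "middle": (12, 10),
    "ring": (16, 14),
    "little": (20, 18),
}


def extended_fingers(keypoints: List[Point3D]) -> List[str]:
    """Return the names of fingers whose tip lies farther from the wrist
    than the same finger's middle joint (a rough 'finger is straight' test)."""
    if len(keypoints) != NUM_KEYPOINTS:
        raise ValueError(f"expected {NUM_KEYPOINTS} keypoints, got {len(keypoints)}")

    wrist = keypoints[WRIST]
    result = []
    for name, (tip, joint) in FINGER_JOINTS.items():
        tip_distance = math.dist(keypoints[tip], wrist)
        joint_distance = math.dist(keypoints[joint], wrist)
        if tip_distance > joint_distance:
            result.append(name)
    return result
```

A gesture recogniser could feed each video frame's keypoints through a check like this and match the resulting finger pattern against known hand shapes. Real sign language understanding would need far more, including motion over time and both hands.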

In a blog post, Google said: “The ability to perceive the shape and motion of hands can be a vital component in improving the user experience across a variety of technological domains and platforms.”

The research and development of this machine learning algorithm could create numerous possibilities, not least for sign language understanding.

Google has not developed a stand-alone app for its algorithm but has published it as open source, allowing other developers to integrate it into their own tech, a move welcomed by campaigners.

There are also potential uses for virtual reality control and digital-overlay augmented reality, or even gesture control functions in driverless vehicles and smart devices.

Assistive tech is making strides as more and more entrepreneurs and engineers enter the market ahead of the consumer curve, with wearables and IoT devices aimed at making the lives of those with assistive needs a little easier.