Artificial intelligence is turning brain signals into speech

Scientists can now read your mind, sort of.

Researchers from the University of California, San Francisco (UCSF) claim to have created a device that turns brain signals into electronic speech. The “brain-machine interface” is a neural decoder that maps cortical activity onto movements of the vocal tract. In one test, a participant read sentences aloud and then mimed the same sentences with their mouth, without producing any sound. The researchers described the results as “encouraging”.

Five volunteers, who had electrodes implanted on the surface of their brains as part of treatment for epilepsy, took part. The researchers recorded the participants’ brain activity as they read sentences aloud, then combined these recordings with data on how sounds are produced and how the tongue, lips, jaw and larynx move when we speak.
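The decoding described above works in two stages: brain activity is first mapped to vocal-tract movements, and those movements are then mapped to sound. The sketch below shows only that data flow. The channel counts, the names `NEURAL_TO_ARTICULATORS` and `ARTICULATORS_TO_ACOUSTICS`, and every weight are hypothetical, and fixed linear maps stand in for the trained neural networks a real decoder would use.

```python
from typing import List

Vector = List[float]
Matrix = List[Vector]


def apply_linear(weights: Matrix, x: Vector) -> Vector:
    """Multiply a weight matrix by a vector (one output per row)."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


# Hypothetical weights: 3 electrode channels -> 4 articulator signals.
# A real decoder would learn these from recorded data.
NEURAL_TO_ARTICULATORS: Matrix = [
    [0.2, 0.1, 0.0],     # tongue
    [0.0, 0.3, 0.1],     # lips
    [0.1, 0.0, 0.2],     # jaw
    [0.05, 0.05, 0.05],  # larynx
]

# Hypothetical weights: 4 articulator signals -> 2 acoustic features.
ARTICULATORS_TO_ACOUSTICS: Matrix = [
    [1.0, 0.5, 0.0, 0.2],
    [0.0, 0.3, 1.0, 0.4],
]


def decode_frame(neural: Vector) -> Vector:
    """Stage 1: brain activity -> vocal-tract movements; stage 2: movements -> sound features."""
    articulators = apply_linear(NEURAL_TO_ARTICULATORS, neural)
    return apply_linear(ARTICULATORS_TO_ACOUSTICS, articulators)


def decode_sequence(frames: List[Vector]) -> List[Vector]:
    """Decode a recording one time frame at a time."""
    return [decode_frame(frame) for frame in frames]


acoustics = decode_sequence([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
```

Decoding each frame independently is the biggest simplification here. A recurrent network can also draw on neighbouring frames, which matters because speech movements unfold over time.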

What is Deep Learning?

A deep-learning algorithm was applied to the vocal-tract movement data and to recordings of the participants’ speech. Deep learning uses artificial neural networks with many layers (hence “deep”), and those networks are in turn loosely inspired by the way a biological brain works.
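To make “many layers” concrete, here is a minimal sketch of a forward pass through a stack of fully connected layers. It assumes a tanh activation and hand-picked weights; `dense_layer` and `forward` are illustrative names, not any library’s API.

```python
import math
from typing import List, Tuple

Vector = List[float]
Layer = Tuple[List[Vector], Vector]  # (weight matrix, bias vector)


def dense_layer(inputs: Vector, weights: List[Vector], biases: Vector) -> Vector:
    """One fully connected layer: weighted sum plus bias, squashed by tanh."""
    return [
        math.tanh(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def forward(inputs: Vector, layers: List[Layer]) -> Vector:
    """Pass the input through each layer in turn; a 'deep' network has many of them."""
    activation = inputs
    for weights, biases in layers:
        activation = dense_layer(activation, weights, biases)
    return activation


# Two layers: 2 inputs -> 2 hidden units -> 1 output (weights made up).
network = [
    ([[0.3, 0.8], [-0.5, 0.1]], [0.0, 0.1]),
    ([[1.0, -1.0]], [0.0]),
]
output = forward([0.5, -0.2], network)
```

Each extra `(weights, biases)` pair adds another layer. Training, not shown here, is what sets those numbers.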

Deep learning is a branch of machine learning, in which machines learn from experience and develop skills without being explicitly programmed by their human masters. From colourising images to facial recognition, deep learning is already contextualising the world around it. Converting brain activity into speech is a huge breakthrough, though translation from one language to another is perhaps the best-known example of the technology at work.
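“Learning from experience” can be shown at its smallest scale: a single adjustable weight fitted to examples by gradient descent. The rule y = 2x is never written into the code; the hypothetical `fit_slope` function recovers it from the data. Deep networks apply the same idea to vast numbers of weights at once.

```python
from typing import List, Tuple


def fit_slope(examples: List[Tuple[float, float]], steps: int = 100, lr: float = 0.1) -> float:
    """Learn w in y = w * x from (x, y) pairs by gradient descent on mean squared error."""
    w = 0.0  # start with no knowledge of the rule
    for _ in range(steps):
        # d/dw of (w*x - y)^2 is 2*(w*x - y)*x; average it over the examples
        grad = sum(2 * (w * x - y) * x for x, y in examples) / len(examples)
        w -= lr * grad  # step downhill
    return w


print(round(fit_slope([(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]), 3))  # 2.0
```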


Deep learning algorithms are changing the way we translate languages. Earlier tools such as Google Translate worked in small pieces, translating a sentence word by word or phrase by phrase, much like looking each piece up in a dictionary. Today, however, algorithms invented just two years ago can match statistical machine translation systems that took 20 years to refine, because they use recurrent neural networks (RNNs) to encode and decode whole sentences.
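The dictionary-style approach is easy to caricature in a few lines. This toy sketch translates each English word into French independently. The three-word `DICTIONARY` is made up for illustration, and real translation tools were never this crude. It shows why the approach fails: French usually places adjectives such as “rouge” after the noun, so the correct translation is “la voiture rouge”.

```python
# A made-up, three-word English-to-French dictionary (illustrative only).
DICTIONARY = {"the": "la", "red": "rouge", "car": "voiture"}


def word_by_word(sentence: str) -> str:
    """Translate each word independently, keeping the English word order."""
    return " ".join(DICTIONARY.get(word, word) for word in sentence.lower().split())


print(word_by_word("The red car"))  # la rouge voiture
```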

An RNN reads a sequence one step at a time and learns patterns in the data. In translation, one network encodes the source sentence into an internal summary and another decodes that summary into the target sentence. There’s no need to program in the rules of human languages, because the network learns what it needs from examples. This is how deep learning can tackle sequence-to-sequence problems such as speech translation, whether from one language to another or from brainwaves into sounds.
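The encoder-decoder idea can be sketched with two tiny recurrent networks in plain Python. This is a toy under stated assumptions: the weights are random and untrained, so the numbers it emits mean nothing, and it uses the simplest (Elman) recurrent cell rather than the LSTM or GRU cells common in practice. `ToySeq2Seq` and its methods are hypothetical names. What it does show is the data flow: the encoder folds an input sequence of any length into one hidden-state vector, and the decoder unrolls that vector into an output sequence whose length can differ from the input’s.

```python
import math
import random
from typing import List

Vector = List[float]
Matrix = List[Vector]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Matrix-vector product."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def rnn_step(x: Vector, h: Vector, w_x: Matrix, w_h: Matrix) -> Vector:
    """One simple (Elman) RNN step: h_new = tanh(W_x x + W_h h)."""
    return [math.tanh(a + b) for a, b in zip(matvec(w_x, x), matvec(w_h, h))]


def random_matrix(rows: int, cols: int, rng: random.Random) -> Matrix:
    """Small random weights, standing in for trained ones."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


class ToySeq2Seq:
    """Untrained encoder-decoder: the weights are random, so outputs are meaningless."""

    def __init__(self, in_size: int, hidden: int, out_size: int, seed: int = 0):
        rng = random.Random(seed)
        self.hidden = hidden
        self.out_size = out_size
        self.enc_x = random_matrix(hidden, in_size, rng)
        self.enc_h = random_matrix(hidden, hidden, rng)
        self.dec_x = random_matrix(hidden, out_size, rng)
        self.dec_h = random_matrix(hidden, hidden, rng)
        self.w_out = random_matrix(out_size, hidden, rng)

    def encode(self, sequence: List[Vector]) -> Vector:
        """Read the input one step at a time; the last hidden state summarises it."""
        h = [0.0] * self.hidden
        for x in sequence:
            h = rnn_step(x, h, self.enc_x, self.enc_h)
        return h

    def decode(self, context: Vector, length: int) -> List[Vector]:
        """Generate `length` outputs, feeding each output back in as the next input."""
        h, y = context, [0.0] * self.out_size
        outputs = []
        for _ in range(length):
            h = rnn_step(y, h, self.dec_x, self.dec_h)
            y = matvec(self.w_out, h)
            outputs.append(y)
        return outputs


model = ToySeq2Seq(in_size=3, hidden=4, out_size=2)
summary = model.encode([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]])
outputs = model.decode(summary, length=5)  # 3 steps in, 5 steps out
```

Once trained, the same structure could in principle map word vectors in one language to another, or a sequence of neural recordings to sound features.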

How impressive is this study?

Just as online translators began by converting individual words from one language to another, scientists had previously only been able to use AI to decode brain activity one syllable at a time.

Many people who have lost the ability to speak rely on speech-generating devices and software. Natural speech averages about 150 words per minute, up to fifteen times faster than the devices used by people with motor neurone disease, so a decoder that restores a more effortless flow of speech would be a breakthrough.
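Taking the article’s figures at face value, speech that is up to fifteen times slower than natural speech puts the slowest devices at roughly ten words per minute. At that rate, a 150-word message that takes one minute to say aloud would take a quarter of an hour to produce:

```latex
\frac{150\ \text{words/min}}{15} = 10\ \text{words/min},
\qquad
\frac{150\ \text{words}}{10\ \text{words/min}} = 15\ \text{min}
```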

“Technology that translates neural activity into speech would be transformative for people who are unable to communicate as a result of neurological impairments,” said the neuroscientists in the study, published on April 24th in Nature. “Decoding speech from neural activity is challenging because speaking requires very precise and rapid multi-dimensional control of vocal tract articulators.”

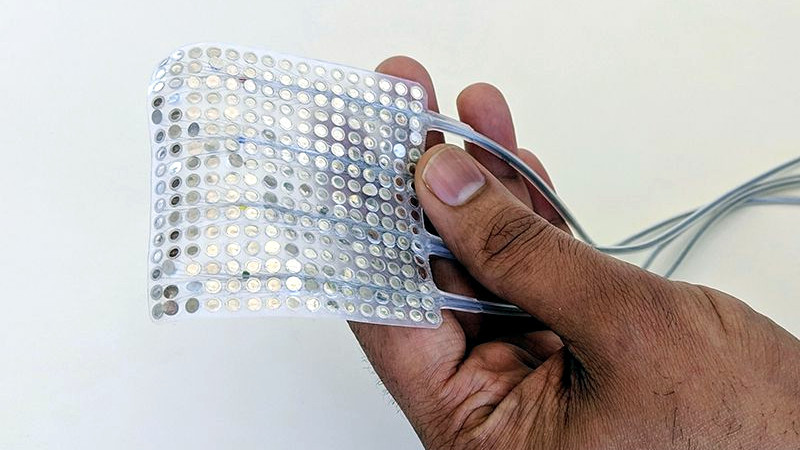

An electronic mesh, a network of flexible circuits placed into the brain, is now being tested on animals. Elon Musk’s Neuralink is also developing an interface between computers and biological brains using neural lace technology, in what the company describes as “ultra high bandwidth brain-machine interfaces to connect humans and computers”.

Artificial intelligence is working on other senses

Vision is another sense that could benefit from decoding neural activity.

A recent study looked at how machine learning can visualise perceptual content by analysing human brain activity recorded with functional magnetic resonance imaging (fMRI). Features were decoded from fMRI activity patterns in the visual cortex (VC), measured while subjects viewed, or merely imagined, visual images. The decoded features were then passed to a reconstruction algorithm to generate an image.
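The reconstruction step can be illustrated as an optimisation: start from random noise and adjust the image until its features match the decoded ones. This minimal sketch assumes a 4-pixel “image” and a hypothetical linear `EXTRACTOR` that stands in for the deep network a real system would use to compute features. Plain gradient descent does the adjusting, and the decoded feature values are made up rather than taken from any fMRI data.

```python
import random
from typing import List

Vector = List[float]
Matrix = List[Vector]

# Hypothetical stand-in for a deep network's feature extractor:
# each feature averages two neighbouring pixels of a 4-pixel image.
EXTRACTOR: Matrix = [
    [0.5, 0.5, 0.0, 0.0],
    [0.0, 0.5, 0.5, 0.0],
    [0.0, 0.0, 0.5, 0.5],
]


def features(image: Vector) -> Vector:
    """Compute the toy (linear) features of an image."""
    return [sum(w * p for w, p in zip(row, image)) for row in EXTRACTOR]


def feature_loss(image: Vector, target: Vector) -> float:
    """Squared distance between the image's features and the decoded features."""
    return sum((f - t) ** 2 for f, t in zip(features(image), target))


def reconstruct(target: Vector, steps: int = 200, lr: float = 0.5, seed: int = 0) -> Vector:
    """Start from random noise and adjust pixels until their features match `target`."""
    rng = random.Random(seed)
    image = [rng.uniform(-1.0, 1.0) for _ in range(len(EXTRACTOR[0]))]
    for _ in range(steps):
        residual = [f - t for f, t in zip(features(image), target)]
        # Gradient of ||F x - t||^2 w.r.t. pixel j is 2 * sum_i F[i][j] * residual[i]
        grad = [
            2 * sum(EXTRACTOR[i][j] * residual[i] for i in range(len(EXTRACTOR)))
            for j in range(len(image))
        ]
        image = [p - lr * g for p, g in zip(image, grad)]
    return image


decoded = [0.5, 0.5, 0.5]  # made-up "decoded" features
image = reconstruct(decoded)
```

The target `[0.5, 0.5, 0.5]` is matched equally well by `[1, 0, 1, 0]` and `[0, 1, 0, 1]`, and gradient descent simply settles on whichever matching image lies closest to its random starting point. That ambiguity is one reason reconstruction methods often add constraints on what a natural image should look like.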

As with the speech research, work on deep image reconstruction from human brain activity suggests that artificial neural networks can provide a new window into the internal contents of the brain.

It’s perhaps getting ahead of ourselves to suggest that mind-reading is imminent, but artificial intelligence and deep learning look set to give the human race a neuromechanical link between brain and machine, of sorts. “These findings advance the clinical viability of using speech neuroprosthetic technology to restore spoken communication,” the study’s authors wrote.

Photo from https://www.nature.com/